Intro

Hello, readers. Over the past 8 months, we (Mary Kidd and Dinah Handel) have been immersed in archives located at public media stations: New York Public Radio and CUNY Television. We’ve experienced firsthand some of the unique workflows created for the dissemination and archiving of public media. In light of the upcoming AAPB cohort, we feel it might be useful to share some of the archiving and preservation challenges and solutions we’ve engaged with at our respective host institutions.

In this blog post, we will address the unique relationship between media archivists and the content creators, broadcast engineers, and other staff they support in the day-to-day “live” media production life cycle. We truly believe that strengthening these relationships is key to successful long-term digital preservation at any public media station.

Mary

For the past nine months I have been working on a Digital Preservation Roadmap that will conclude with several recommendations to help The Archive improve existing workflows. This blog post covers one of the recommendations I am putting forth: using the Master Control broadcast schedule and airchecks to ensure that The Archive accomplishes its goal of saving all finished broadcast audio files produced by NYPR. By “finished”, I mean the final mix of a high-resolution (44.1 kHz sample rate, 16-bit depth) broadcast WAV file played out on air.

How radio is played out automatically on air

In the early days of radio, a DJ, host, or sound engineer would cue up the physical media and manually play it out in a specific sequence while live on air. Since then, broadcasts have become largely automated. NYPR uses a combination of scheduling and broadcast production management software to achieve the seamless radio programming you can tune into today.

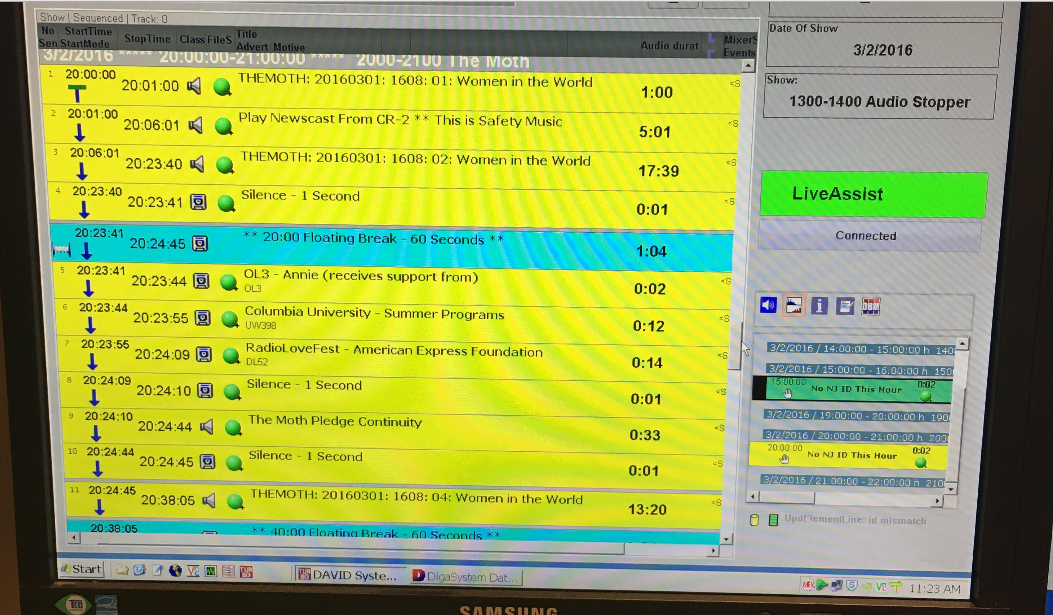

The scheduler is used to plot out each day’s and week’s broadcasts. On screen, the schedule looks much like a calendar or a task list, where each box corresponds to a certain time block, also known as an “air window”. It is important to know that each show is assigned its own unique ID; you will see in a bit how that plays into how the scheduler works.

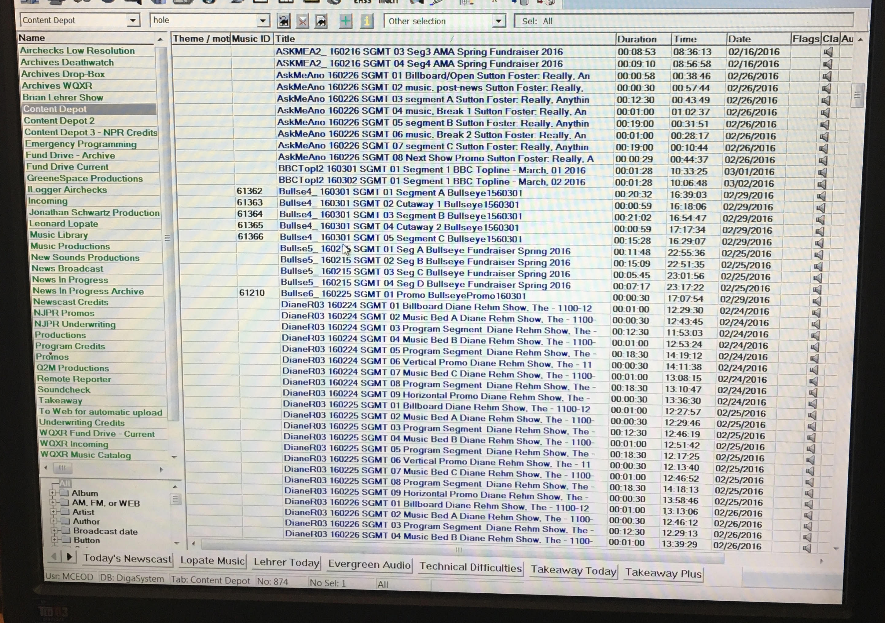

This gives you an idea of what a schedule looks like up-close. You can see that even moments of silence are allotted their own specific time slot alongside shows.

The actual audio files broadcast on air are stored and managed in broadcast production management software called DAVID. The Archive also uses DAVID as a repository for its born-digital holdings. Each audio file is represented by a DAVID record, which is where the unique show ID I mentioned above is stored. Based on the current air window, the scheduler trawls DAVID for a match on (basically) the unique ID + date + start/end time combination. When it finds a match, it plays out the audio. When the audio ends, the scheduler moves on to the next air window in sequence and trawls DAVID for the next audio file to play out on air. And that, in a nutshell, is how automated playout works.
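To make the matching step concrete, here is a minimal Python sketch of the lookup described above. It is not DAVID’s API or NYPR’s scheduler: the `AirWindow` and `DavidRecord` classes, their field names, and the exact-match rule are hypothetical stand-ins for “(basically) unique ID + date + start/end time”.

```python
from dataclasses import dataclass
from datetime import date, time
from typing import Optional


@dataclass(frozen=True)
class AirWindow:
    """One scheduled time block (hypothetical fields)."""
    show_id: str
    air_date: date
    start: time
    end: time


@dataclass(frozen=True)
class DavidRecord:
    """Hypothetical stand-in for a DAVID record describing one audio file."""
    show_id: str
    air_date: date
    start: time
    end: time
    filename: str


def find_audio(window: AirWindow, records: list[DavidRecord]) -> Optional[DavidRecord]:
    """Return the first record whose ID + date + start/end time match the window."""
    key = (window.show_id, window.air_date, window.start, window.end)
    for rec in records:
        if (rec.show_id, rec.air_date, rec.start, rec.end) == key:
            return rec
    return None  # no match: the kind of gap Master Control watches for


def play_out(schedule: list[AirWindow], records: list[DavidRecord]) -> list[str]:
    """Walk the air windows in time order and 'play' each match (here: collect its filename)."""
    played = []
    for window in sorted(schedule, key=lambda w: (w.air_date, w.start)):
        rec = find_audio(window, records)
        played.append(rec.filename if rec else f"<no audio for {window.show_id}>")
    return played
```

The real system matches on more than this (and handles edge cases like overlapping windows), but the core idea is a keyed lookup per air window, repeated in schedule order.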

Master Control

Broadcasts are (thankfully) monitored 24/7 by engineers who work in a room called “Master Control”. Master Control is kind of like the air traffic control tower at an airport. Just as an air traffic controller must be aware of all aircraft within their jurisdiction at all times by monitoring radar, a Master Control engineer must monitor all broadcast outputs to ensure that there is no “dead air”. Basically, they work around the clock to ensure the ongoing integrity of the audio being played out. I actually had an opportunity to interview a Master Control engineer for my NDSR report. Having never stepped inside a Master Control room before, I was totally blown away. There are monitors everywhere, all playing at once: classical music, talk radio, a live stream of a press conference. To give you an idea of how trippy this is, here is a clip I made with everything going all at once!

https://soundcloud.com/keyshape/control-center

Aircheck, Check, 1, 2, 3…

In addition to monitoring broadcasts, Master Control also manages a series of ingest servers that record all broadcasts 24/7. These recordings are called airchecks, and they are useful for a number of reasons: the resulting digital files are a copy of all live broadcasts, so they are essentially a backup. They can also be used for legal purposes and for reference. An important aspect of NYPR’s aircheck workflow is that the ingest servers actually create two different types of airchecks: an in-house aircheck and an off-air aircheck. The in-house aircheck copies the audio signal before it is broadcast. These airchecks are of the highest audio quality possible, because each is basically a 1:1 copy of the broadcast WAV file. The off-air aircheck, which is literally recorded off the air, is of lower sound quality.

Where the airchecks go

After the in-house, high-quality airchecks are recorded by an ingest server, they are routed into a folder in DAVID called “Incoming” and are auto-cataloged with a date, duration, some technical metadata and, sometimes, a slug (a slug is basically a short unique name given to a show or a piece of news). If Master Control receives a special request to record a live stream of a debate or a press conference, they will sometimes hand-key their own metadata to make it easier for reporters and archivists to find. The Incoming folder is a bit of a doozy, though: every 9 days it is purged. Deleted. Finito. Well, okay. Not exactly. Backups of airchecks are made on CD, and scripts automatically move some items into the archival folders. But it’s good to know that this folder is ephemeral.
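Because the Incoming folder is purged every 9 days, anyone relying on it needs to know when each aircheck will disappear. Below is a minimal Python sketch, assuming a hypothetical catalog entry carrying the auto-cataloged fields mentioned above (date, duration, optional slug). It also assumes each item is purged exactly 9 days after it was recorded; that is a simplification, since the folder may instead be cleared on a fixed cycle.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Assumption: an item is purged 9 days after recording (the post says "every 9 days").
PURGE_AFTER = timedelta(days=9)


@dataclass
class IncomingAircheck:
    """Hypothetical auto-cataloged entry in the Incoming folder."""
    recorded_at: datetime
    duration_s: int
    slug: Optional[str] = None  # slugs are only sometimes present

    @property
    def purge_at(self) -> datetime:
        return self.recorded_at + PURGE_AFTER


def at_risk(items: list[IncomingAircheck], now: datetime,
            warn_days: int = 2) -> list[IncomingAircheck]:
    """Items not yet purged that will be purged within `warn_days`, soonest first."""
    horizon = now + timedelta(days=warn_days)
    risky = [i for i in items if now <= i.purge_at <= horizon]
    return sorted(risky, key=lambda i: i.purge_at)
```

A list like this tells an archivist which recordings have to be pulled out of Incoming first.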

So, how can a Master Control schedule make a radio archivist’s life easier?

At the moment, The Archive’s goal is to keep and preserve all finished broadcast audio content. To do this, The Archive has worked with Broadcast Engineers to create a series of scripts that recognize and route individual files out of the folders that producers manage and work from. But these scripts do not always capture everything, and where they fall short, archivists make up the difference by manually reviewing and triaging audio files to make sure they get where they need to go. They are literally eyeballing the DAVID folders every single day, which takes a lot of time and effort. But humans make errors. And scripts sometimes fail, too! Also, producers very often forget to enter the correct (or any) title or slug in a DAVID record. Master Control engineers may occasionally mis-catalog a special press conference recording. And archivists are prone to mis-cataloging items as well. As you can see, there is a lot of room for error. As an archivist, I thought to myself: there must be a better way!

More colorful Master Control room racks.

The recommendation!

My recommendation is for The Archive to use the broadcast scheduler as a sort of master checklist to determine what should be ingested into its repository.

How this could work:

- High-quality audio files from the aircheck ingest server are also recorded into a new folder called “Archives Incoming”, just as they are recorded into the “Incoming” folder.

- Each day, an automated SQL query checks the “Archives Incoming” folder against the Master Control broadcast schedule. This generates a report that is sent to The Archive for review. If there are any gaps between what was received and what was scheduled, archivists can go into the “Incoming” folder and retrieve the audio they need before that folder is purged.

- This solution can also be used for auto-cataloging. In fact, the scheduler already does this for some shows, so it can likely be extended to all air windows with a little tweaking on the back end.

- This also relieves The Archive of the burden of keeping up with the broadcast schedule, which is constantly changing. By integrating the archival workflow with the live schedule, archivists can be confident they are always getting what’s current.
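At its core, the daily check described in these steps is an anti-join: every air window in the schedule with no matching file in “Archives Incoming” is a gap. Here is a minimal sketch using Python’s built-in `sqlite3` module. The table and column names (`schedule`, `archives_incoming`, `show_id`, `air_date`, `start_time`, `title`) are hypothetical. NYPR’s scheduler and DAVID have their own databases, and the real query would be written against those.

```python
import sqlite3

# Scheduled air windows with no matching file in "Archives Incoming" (hypothetical schema).
GAP_QUERY = """
SELECT s.show_id, s.air_date, s.start_time, s.title
FROM schedule AS s
LEFT JOIN archives_incoming AS a
  ON a.show_id = s.show_id
 AND a.air_date = s.air_date
 AND a.start_time = s.start_time
WHERE a.show_id IS NULL
ORDER BY s.air_date, s.start_time;
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with the two hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE schedule (show_id TEXT, air_date TEXT, start_time TEXT, title TEXT);
        CREATE TABLE archives_incoming (show_id TEXT, air_date TEXT, start_time TEXT, filename TEXT);
    """)
    return conn


def daily_gap_report(conn: sqlite3.Connection) -> list[tuple]:
    """Rows the archivists would need to retrieve from "Incoming" before it is purged."""
    return conn.execute(GAP_QUERY).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    conn.executemany("INSERT INTO schedule VALUES (?, ?, ?, ?)", [
        ("SHOW-A", "2016-05-02", "13:00", "Morning Show"),
        ("SHOW-B", "2016-05-02", "14:00", "Afternoon Talk"),
    ])
    conn.execute("INSERT INTO archives_incoming VALUES (?, ?, ?, ?)",
                 ("SHOW-A", "2016-05-02", "13:00", "show-a_20160502.wav"))
    for row in daily_gap_report(conn):
        print("missing:", row)
```

Because the `title` comes from the schedule, the same join could also feed auto-cataloging for the windows that did arrive. Since moments of silence get their own slots in the schedule, a real query would also need to filter those windows out of the report.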

Any archivist working in a production-driven environment knows that balancing The Archive’s goals with content creators’ deadlines is never a simple process. Radio is a fast-paced environment, and solutions are not always apparent when so many disparate systems work with one another, sometimes in obscure ways. New material is being output every minute of every hour of every day. My recommendation is an attempt to simplify an otherwise highly complex process of people and scripts sifting through multiple folders, by using the broadcast schedule and the resulting airchecks as the be-all and end-all of what gets routed into the repository. Of course there will be exceptions, but it is a way to cut down on some of the manual work.

As you will see when we continue with Dinah’s take on the archive-producer-engineering relationship, great things can happen when archives leverage existing technical infrastructure to support long-term preservation. Achieving this means understanding and exposing workflows, and then directly addressing where the archive fits within them, so that everyone is on the same page (unlike our friend Frasier here).

Dinah

At CUNY Television, the largest university television station in the country, the library and archives are embedded in the production environment. Put simply, all material created for broadcast must go through the archive before it airs on television. I’ve probably mentioned this in previous blog posts, but it is fundamental to how we structure our workflow and archival practices. In the following sections, I’ll talk about some of the challenges of being an archive embedded in a production environment, and how these challenges require us to modify or supplement our workflows. Specifically, I’ll touch on the implications of the transition from a tape-based to a file-based workflow, the many types of content the library and archives must manage, storage bloat and redundancy, and the ways in which digital preservation standards, specifically the Archival Information Package, don’t necessarily map well to our local needs.

Tape ➡️ files

Here is how things worked before we had a file-based workflow: we broadcast shows from tape. Producers recorded edited shows to tape (the master control room supported XDCAM, Digibeta, Betacam SP, and Betacam SX tapes for broadcast). Oksana, the broadcast librarian and the OG of the CUNY TV library and archives, created a schedule based on the programming director’s specifications. Every two days she took the physical tapes from the shelves in the library (now the office in which we work), loaded them onto a cart, and brought them into the master control room for the operators to broadcast.

Fast forward to 2012, when the transition to a file-based workflow began. A few major changes to our infrastructure allowed this transition to take place:

- No more XDCAMs! Before 2012, on-site producers and editors had been exporting two versions of each show: an XDCAM disc for the library and an HD file for our web team to upload. The library proposed that instead of the XDCAM disc, it would take the file delivered to the web team. By obtaining the file rather than the disc, the library would have the preservation master file. This gave the library the ability to create and distribute derivative copies, which is useful for facilitating access and also allowed us to insert ourselves into other workflows. Now, our ingest script sends a derivative of the master file to the web team, instead of producers or editors delivering it themselves.

- Use a server! Another impact of the file-based workflow was increased use of the server we broadcast from, an Omneon, which serves as the temporary storage location for the broadcast copies of our files.

- Use FTP! The library and archives also set up an FTP server for delivery of shows from off-site, so people from the different CUNY campuses, which are spread far and wide across the five boroughs, no longer had to hand-deliver tapes to the CUNY Television studios.

In addition to these infrastructure changes, Dave Rice, the manager of the library and archives, began to develop media microservices, which have grown into the primary means for the transcoding and dissemination of access copies.

But just because we have transitioned from tape to files doesn’t mean we never have to deal with tapes again! In fact, many other public broadcasting stations still use a tape-based workflow, including one that we distribute to. This means we have to modify our workflow to accommodate external needs. Recently, we had to build a new dissemination workflow for a show that we broadcast. This included adding colorbars, a slate, and 30 seconds of black at the end before recording the show onto a Betacam SP tape to send to the distributor for broadcast. Watch this mesmerizing colorbars gif while the previous sentence sinks in…

Once we recovered from realizing we had to incorporate tapes again, we got to work adapting our workflow. Dave created a script called makeslate that prompts the user in the terminal for the information needed for the slate, then adds the colorbars, slate, and 30 seconds of black to an input video file. I then incorporated it into our workflow by adding it to our microservice scripts. Presently, our ingest script, ingestfile, has an option that asks the user whether they want to create a copy with the slate. If the user chooses yes, the script creates a copy with the slate and places it in the same directory within the package as the service copy for broadcast.
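The sketch below is not the code of makeslate or ingestfile. It is a minimal Python illustration, under stated assumptions, of two ideas from the paragraph above: the tape copy wraps the program in colorbars and a slate at the head and 30 seconds of black at the tail, and the slated copy lands in the same directory as the service copy. The colorbar and slate durations, the `SlateInfo` fields, the `_slate` filename suffix, and the example package path are all hypothetical; only the 30 seconds of black comes from the workflow described above.

```python
from dataclasses import dataclass
from pathlib import Path

BARS_SECONDS = 60   # hypothetical colorbar duration
SLATE_SECONDS = 10  # hypothetical time the slate is held on screen
BLACK_SECONDS = 30  # 30 seconds of black at the end, as described above


@dataclass
class SlateInfo:
    """Answers the user would give at the interactive prompts (hypothetical fields)."""
    title: str
    episode: str
    air_date: str
    duration: str


def slate_text(info: SlateInfo) -> str:
    """Lines of text to render on the slate card."""
    return "\n".join([
        f"Title: {info.title}",
        f"Episode: {info.episode}",
        f"Air date: {info.air_date}",
        f"Duration: {info.duration}",
    ])


def tape_layout(program_seconds: float) -> list[tuple[str, float]]:
    """Ordered segments for the tape copy: bars, slate, program, then black."""
    return [
        ("colorbars", BARS_SECONDS),
        ("slate", SLATE_SECONDS),
        ("program", program_seconds),
        ("black", BLACK_SECONDS),
    ]


def slated_copy_path(service_copy: Path) -> Path:
    """Place the slated copy next to the service copy, with a hypothetical '_slate' suffix."""
    return service_copy.with_name(f"{service_copy.stem}_slate{service_copy.suffix}")
```

In an ingest script, the yes/no option would just decide whether to call something like `slated_copy_path` and render the segments from `tape_layout` (the actual rendering would be done by a tool such as ffmpeg, which this sketch leaves out).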

So many types of material and content!

These puppies represent the flood of content that moves through the library daily, although a more accurate gif would include many types of puppies.

Another aspect of being part of a production environment is the multitude of types of material we attempt to catalog and store, each of which has a different workflow. They mostly fall into four categories:

- Material for broadcast: This is the simplest type of material we work with. It consists of completed television shows that editors deliver to the library. For this material, we run our ingest script, which creates all derivatives and metadata and packages everything into an Archival Information Package. These shows have listings in our scheduling software, ProTrack, which reports to our FileMaker database for descriptive metadata. The Archival Information Packages are written to LTO for long-term storage.

- Digitized and migrated shows: Remember earlier when I said that we transitioned from a tape-based to a file-based workflow in 2012? That means all the material from 1985 to 2012 was on tape and needed to be digitized or migrated to digital files. We’ve also acquired special collections material that dates back even further. This is an ongoing project, and like most other archives, we have a decent backlog. For this material, we also run our ingest script, which I had to modify to include the additional metadata documents created during digitization. While legacy metadata records have been imported into our FileMaker database, they have to be supplemented with additional cataloging. These Archival Information Packages are also written to LTO tape for long-term storage.

- Remote, raw, and b-roll footage: For many shows, producers record footage off-site (of events, visits to locations profiled in shows, and other content) onto XDCAM discs or SxS cards. Producers then edit this footage into final broadcast shows. The archive handles this footage because we have the SxS card and XDCAM readers: we get the data off the cards and make a file that we deliver back to producers. These files contain embedded technical metadata, are cataloged by hand in our FileMaker database, are linked to the completed shows when possible, and are eventually written to LTO for long-term storage. Currently, we don’t have a good mechanism for packaging these materials for long-term storage like we do for the files we broadcast.

- Line cuts: Line cuts are the assembly of studio feed recordings by the technical director. We only preserve some of these. Similar to the raw, remote, and b-roll footage, we don’t have a mechanism for creating Archival Information Packages for this type of footage.
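One way to picture these four workflows side by side is as a lookup table from category to processing steps. The Python sketch below simply encodes what the list above says about each category. The enum names and step labels are hypothetical, not identifiers from our scripts or FileMaker database. Laid out this way, the gap is easy to see: raw footage and line cuts have no AIP step.

```python
from enum import Enum


class Material(Enum):
    BROADCAST = "material for broadcast"
    DIGITIZED = "digitized and migrated shows"
    RAW = "remote, raw, and b-roll footage"
    LINE_CUT = "line cuts"


# Steps per category, as described in the list above (labels are illustrative).
WORKFLOWS: dict[Material, list[str]] = {
    Material.BROADCAST: ["run ingest script", "create derivatives and metadata",
                         "package AIP", "link ProTrack listing to FileMaker",
                         "write to LTO"],
    Material.DIGITIZED: ["run ingest script (with digitization metadata)",
                         "supplement legacy FileMaker record", "package AIP",
                         "write to LTO"],
    Material.RAW: ["read XDCAM disc / SxS card", "deliver file to producers",
                   "catalog by hand in FileMaker",
                   "link to completed show if possible", "write to LTO"],
    Material.LINE_CUT: ["select which line cuts to keep"],
}


def has_aip_step(material: Material) -> bool:
    """True if the workflow for this category currently produces an AIP."""
    return any("AIP" in step for step in WORKFLOWS[material])


def categories_without_aips() -> list[Material]:
    """Categories whose workflows stop short of an Archival Information Package."""
    return [m for m in Material if not has_aip_step(m)]
```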

Storage bloat


Managing all of this content can feel a bit unruly, especially when it comes to monitoring space on our servers, of which there are many. We are able to write a lot of packaged content to LTO, which frees space that is then quickly filled by new content. However, we recently realized that a lot of the material stored on our production server, about 20 TB to be more precise, was duplicated. This happens because the production server is not easily searchable except by filename, so each producer finds the content they want and makes their own copy, with a different filename, in their own directory. Our solution is to try to implement our Digital Asset Management system, synced with the production folders, so that instead of many copies in individual producers’ disparate sub-directories, there is only one copy of any given file in a searchable database. Producers would then be able to locate the file in the finder, ideally cutting down on redundancy.
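Because these duplicates carry different filenames, comparing names won’t find them. Comparing file contents will. A common approach is to hash every file and group identical digests. Here is a minimal Python sketch using `hashlib` (SHA-256, read in chunks so large video files never have to fit in memory). It only hashes files whose sizes collide, since a file with a unique size cannot have a duplicate. This is an illustration, not how the 20 TB figure was arrived at, and the root directory you point it at is up to you.

```python
import hashlib
import os
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> dict[str, list[Path]]:
    """Map digest -> paths for every digest that appears more than once under root."""
    by_size: dict[int, list[Path]] = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            p = Path(dirpath) / name
            by_size[p.stat().st_size].append(p)

    by_digest: dict[str, list[Path]] = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # unique size: cannot have a duplicate, skip hashing
        for p in paths:
            by_digest[file_digest(p)].append(p)
    return {d: sorted(ps) for d, ps in by_digest.items() if len(ps) > 1}


def wasted_bytes(dupes: dict[str, list[Path]]) -> int:
    """Space that could be reclaimed by keeping one copy of each duplicated file."""
    return sum(paths[0].stat().st_size * (len(paths) - 1) for paths in dupes.values())
```

A report like this can show producers which of their copies are identical, but it doesn’t replace the DAM approach described above, which aims to stop the copies from being made in the first place.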

Active AIPs

The concept of an “active” Archival Information Package has plagued me since the early days of my residency. As I understand it, Archival Information Packages are typically conceived of as final, discrete, complete entities that get sent off to long-term storage, never to be seen again. Contrary to this idea, we revisit our Archival Information Packages constantly, because they contain the derivative copies we upload for broadcast. We also create and disseminate our Dissemination Information Packages before our Archival Information Packages are complete, which disrupts the linear timeline posed by the OAIS Reference Model. My experience with CUNY TV’s Archival Information Packages, along with my instruction in the OAIS Reference Model, has certainly made me rethink how well some digital preservation standards suit non-traditional archives.

Conclusion

We hope that some of the experiences and solutions we’ve shared in this blog post are insightful, or even helpful, to those of you working in archives that interface with production teams and media creators. We’re both eagerly anticipating the work that the upcoming AAPB NDSR residents will do at their host institutions, and hope that they continue to shed light on archival workflows at public media stations. And if you work at a public media station, or are an archivist embedded in a production environment, we’d love to hear about the challenges you face in the comments!

Signing off…